The Oversight Board is urging Meta to update its manipulated media policy, calling the existing rules “inappropriate.” The advice comes in a closely watched decision about a misleadingly edited video of President Joe Biden.

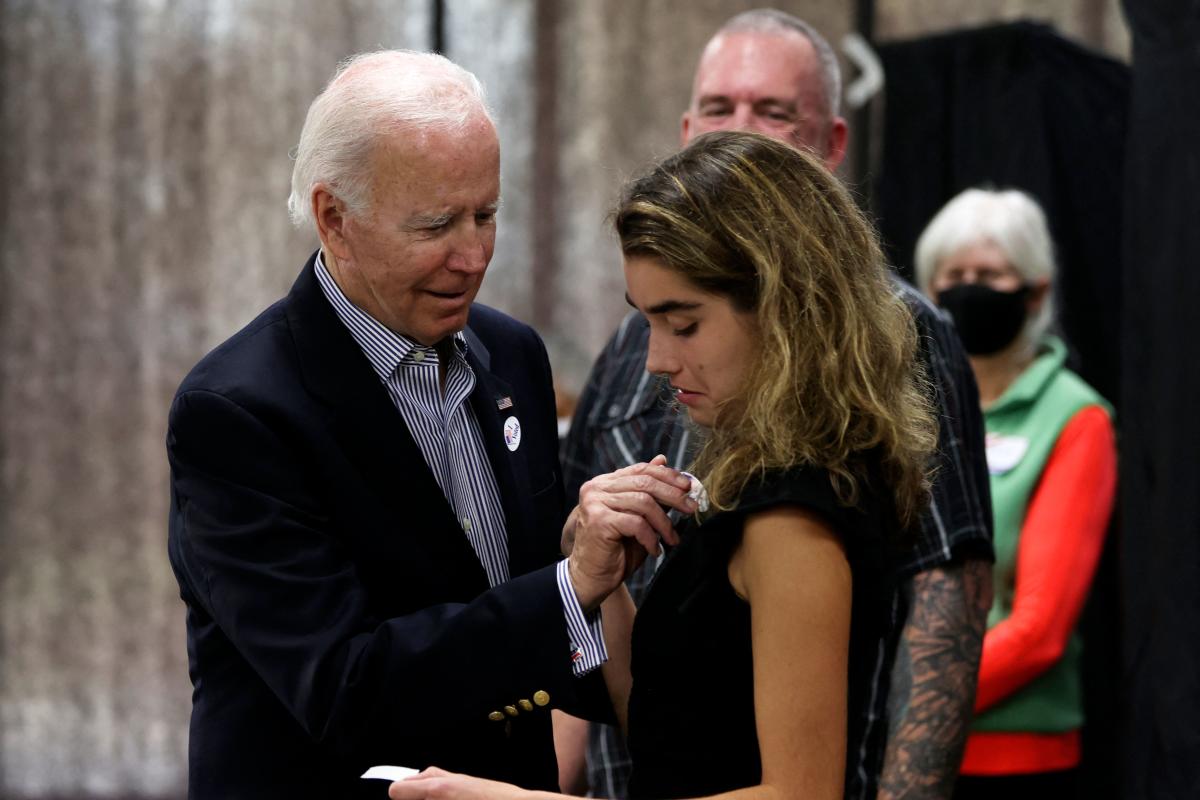

The board ultimately sided with Meta on its decision not to remove the clip at the heart of the case. The video includes footage from October 2022, when the president accompanied his granddaughter as she voted in person for the first time. In the news footage, after she voted, he placed an “I Voted” sticker on her shirt. Later, a Facebook user shared a version that was edited to make it look as though he touched her chest repeatedly. The caption accompanying the clip called him a “sick pedophile” and said that those who voted for him were “psychologically deficient.”

In its decision, the Oversight Board stated that the video did not violate Meta’s manipulated media policy because it was not edited with AI tools and because the edits were “obvious and therefore unlikely to confuse most users.” “However, the Board is concerned about the Manipulated Media policy in its current form, which is inconsistent, lacks persuasive reasoning, and focuses inappropriately on how content is created rather than on what specific harm it aims to prevent (e.g., to electoral processes),” the board wrote. “Meta should quickly review this policy, taking into account the number of elections to be held in 2024.”

The company’s current rules only apply to videos edited with artificial intelligence and do not cover other types of editing that may be misleading. In its policy recommendations to Meta, the Oversight Board says the company should write new rules covering both audio and video content. The policy should apply not only to misleading speech but also to “content showing people doing things they did not do.” The board says these rules should apply “regardless of how they are created.” Additionally, the board recommends that Meta no longer remove posts with manipulated media unless the content violates other rules. Instead, the board suggests Meta “apply a label indicating that content is significantly altered and potentially misleading.”

The recommendations highlight growing concern among researchers and civil society groups that the spread of artificial intelligence tools could fuel a new wave of viral misinformation. In a statement, a Meta spokesperson said the company is “reviewing the Board’s guidance” and will respond publicly within the next 60 days. While that response would come well before the 2024 presidential election, it’s unclear when, or if, any policy change will follow. In its decision, the Oversight Board wrote that Meta’s representatives said the company “plans to update its Manipulated Media policy to respond to the evolution of new and increasingly realistic AI.”