A coalition of 20 tech companies signed an agreement on Friday to help prevent AI deepfakes in the critical 2024 elections taking place in more than 40 countries. OpenAI, Google, Meta, Amazon, Adobe and X are among the companies that have joined the pact to prevent and combat AI-generated content that could influence voters. However, the agreement’s vague language and lack of binding enforcement call into question whether it goes far enough.

The list of companies that signed the “Tech Accord to Combat Deceptive Use of AI in 2024 Elections” includes those that build and distribute AI models, as well as social platforms where deepfakes are most likely to show up. The signatories are Adobe, Amazon, Anthropic, Arm, ElevenLabs, Google, IBM, Inflection AI, LinkedIn, McAfee, Meta, Microsoft, Nota, OpenAI, Snap Inc., Stability AI, TikTok, Trend Micro, Truepic and X (formerly Twitter).

The group describes the agreement as “a set of commitments to deploy technology countering harmful AI-generated content meant to deceive voters.” The signatories have agreed to the following eight commitments:

- Developing and implementing technology to mitigate risks related to deceptive AI election content, including open-source tools where appropriate
- Assessing models in scope of the accord to understand the risks they may present regarding deceptive AI election content
- Seeking to detect the distribution of this content on their platforms
- Seeking to appropriately address this content when it is detected on their platforms
- Fostering cross-industry resilience to deceptive AI election content
- Providing transparency to the public about how the company addresses it
- Continuing to engage with a diverse set of global civil society organizations and academics
- Supporting efforts to foster public awareness, media literacy and society-wide resilience

The agreement applies to AI-generated audio, video and images. It addresses “content that deceptively fakes or alters the appearance, voice, or actions of political candidates, election officials, and other key stakeholders in a democratic election, or that provides false information to voters about when, where, and how they can vote.”

The signatories say they will work together to create and share tools to detect and address the online distribution of deepfakes. In addition, they plan to run educational campaigns and “provide transparency” to users.

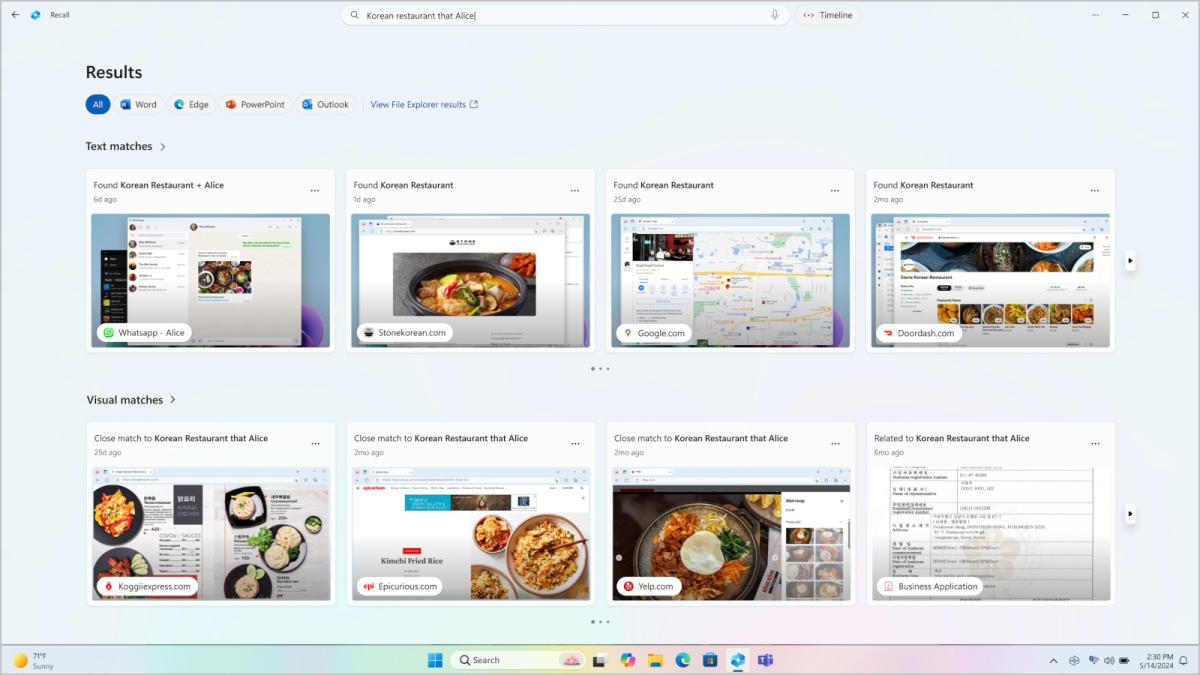

OpenAI, one of the signatories, said last month that it plans to suppress election-related misinformation worldwide. Images generated with the company’s DALL-E 3 tool will be encoded with a classifier providing a digital watermark that identifies them as AI-generated. The ChatGPT maker said it would also work with journalists, researchers and platforms for feedback on its provenance classifier. It also plans to prevent its chatbots from impersonating candidates.

“We are committed to protecting the integrity of elections by implementing policies that prevent abuse and increasing transparency around AI-generated content,” Anna Makanju, OpenAI’s Vice President of Global Affairs, said in the group’s joint press release. “We look forward to working with industry partners, civil society leaders and governments around the world to protect elections from fraudulent use of artificial intelligence.”

Midjourney, the AI image generator (of the same name) that currently produces the most convincing fake photos, isn’t on the list. However, the company said earlier this month that it would consider banning political images altogether during election season. Last year, Midjourney was used to create a viral fake image of Pope Francis strutting down the street in a puffy white jacket. One of Midjourney’s closest competitors, Stability AI (makers of the open-source Stable Diffusion), did sign on. Engadget has reached out to Midjourney for comment on its absence, and we’ll update this article if we hear back.

Apple is the only one of Silicon Valley’s “Big Five” missing from the list. That may be explained by the fact that the iPhone maker has yet to launch any generative AI products and doesn’t host a social media platform where deepfakes could spread. Regardless, we’ve reached out to Apple PR for clarification but hadn’t heard back at the time of publication.

Although the general principles the 20 companies agreed to sound like a promising start, it remains to be seen whether a loose set of non-binding commitments will be enough to combat a nightmare scenario in which the world’s bad actors use generative AI to sway public opinion and elect aggressively anti-democratic candidates in the US and elsewhere.

“The language isn’t quite as strong as one might have expected,” Rachel Orey, senior director of the Bipartisan Policy Center’s Elections Project, told The Associated Press on Friday. “I think we should give credit where credit is due and acknowledge that the companies do have a vested interest in their tools not being used to undermine free and fair elections. That said, it is voluntary, and we’ll be keeping an eye on whether they follow through.”

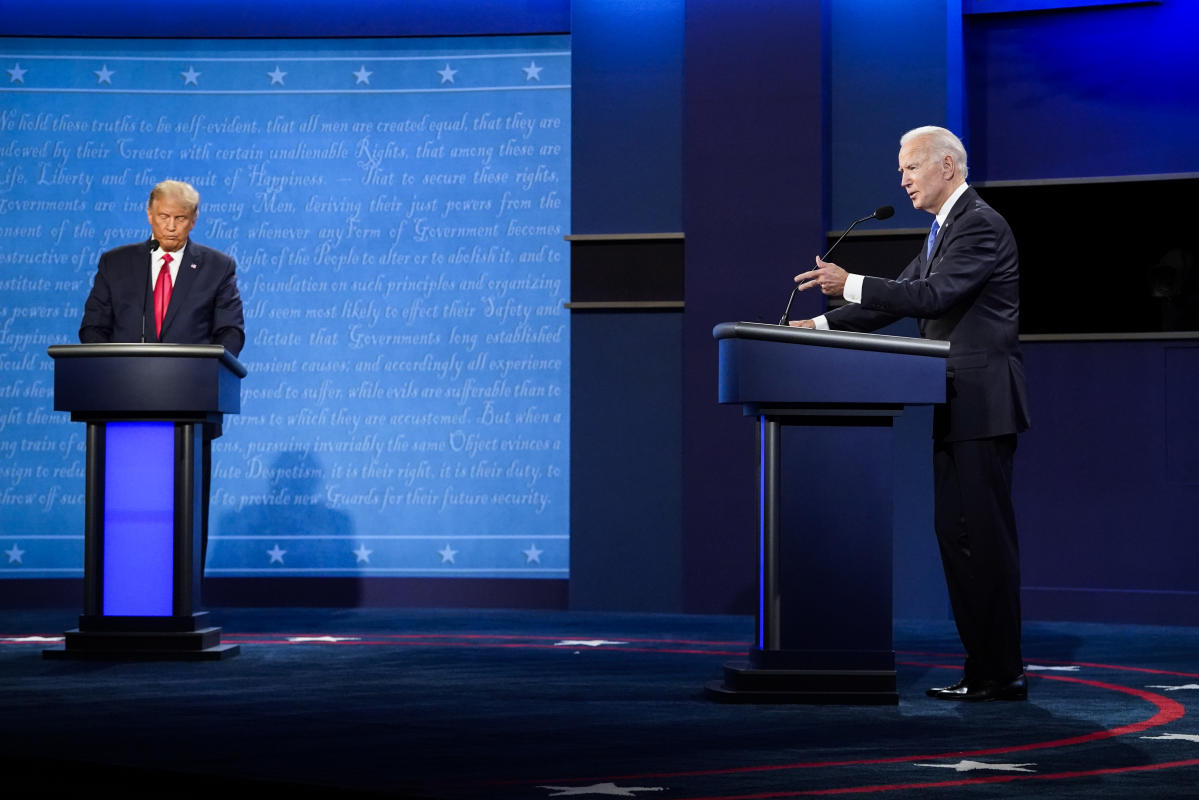

AI-generated deepfakes have already appeared in the US presidential election. In April 2023, the Republican National Committee (RNC) ran an ad using AI-generated images of President Joe Biden and Vice President Kamala Harris. In June 2023, the campaign of Ron DeSantis, who has since dropped out of the GOP primary, followed with AI-generated images of rival and presumptive nominee Donald Trump. Both included easy-to-miss disclaimers that the images were AI-generated.

In January, an AI-generated deepfake of President Biden’s voice was used by two Texas-based companies to robocall New Hampshire voters, urging them not to vote in the state’s January 23 primary. According to the state attorney general, the calls reached 25,000 New Hampshire voters. ElevenLabs is among the pact’s signatories.

The Federal Communications Commission (FCC) moved quickly to crack down on abuses of voice-cloning technology in fake campaign calls. Earlier this month, it voted unanimously to ban AI-generated robocalls. The (seemingly perpetually deadlocked) US Congress hasn’t passed any AI legislation. In December, the European Union (EU) agreed on the AI Act, an expansive AI safety bill that could influence other nations’ regulatory efforts.

“As society embraces the benefits of AI, we have a responsibility to help ensure these tools don’t become weaponized in elections,” Microsoft Vice Chair and President Brad Smith wrote in a press release. “AI didn’t create election deception, but we must ensure it doesn’t help deception flourish.”