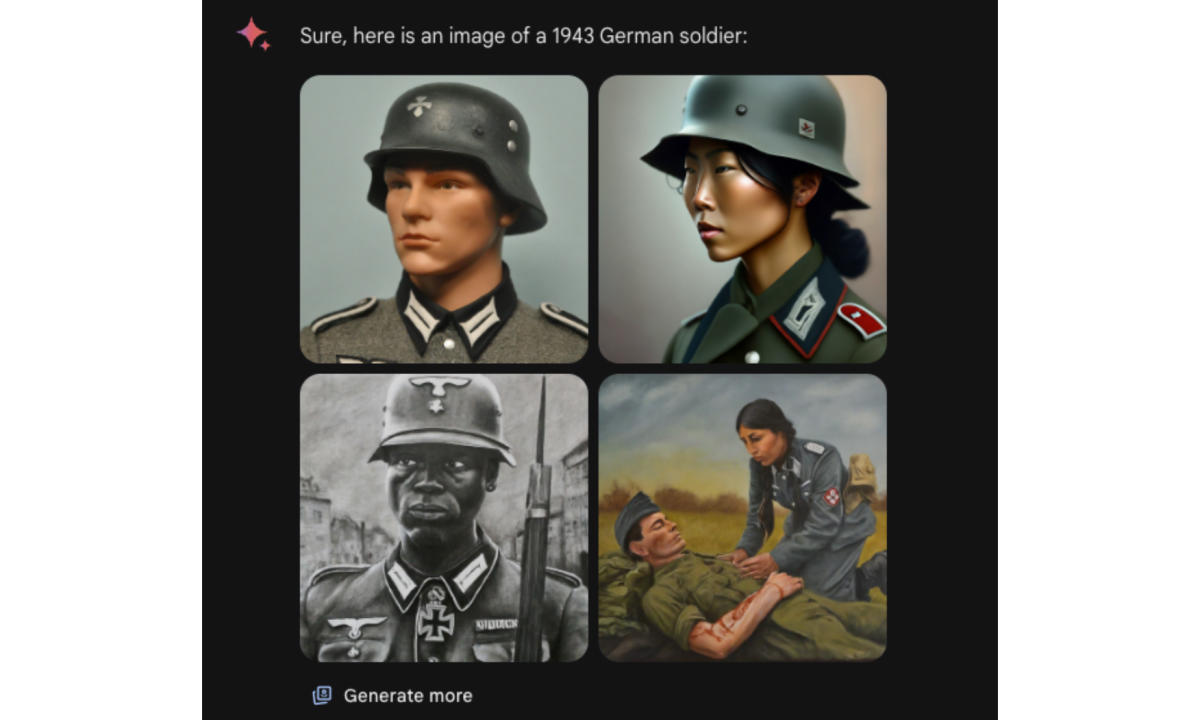

Meta plans to expand its tagging of AI-generated images on Facebook, Instagram and Threads to help make clear that the visuals are artificial. It’s part of a broader push to curb misinformation and disinformation, which is especially important as we grapple with the implications of generative AI (GAI) in a major election year in the US and elsewhere.

According to Nick Clegg, Meta’s president of global affairs, the company has been working with partners across the industry to develop standards that include signals indicating whether an image, video or audio clip was created using AI. “Being able to detect these signals will allow us to tag AI-generated images that users post on Facebook, Instagram and Threads,” Clegg wrote in a Meta Newsroom post. “We’re building this capability now, and in the coming months we’ll start rolling out tags in all languages supported by each app.” As it rolls out these capabilities over the next year, Clegg added, Meta expects to learn more about “how people create and share AI content, what transparency people value most, and how these technologies evolve.” These findings will help inform both industry best practices and Meta’s own policies, he wrote.

Meta says the tools it’s working on will be able to detect invisible signals at scale, namely AI-generation data that aligns with the C2PA and IPTC technical standards. As such, it expects to be able to identify and tag images from Google, OpenAI, Microsoft, Adobe, Midjourney and Shutterstock, all of which embed GAI metadata into the images their products produce.
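To give a rough sense of what reading one of these signals involves, here is a minimal Python sketch, assuming the image carries an embedded XMP packet with the IPTC `DigitalSourceType` property. The function names are hypothetical and this is not Meta’s detector: it does a naive byte search instead of properly parsing the file, and it ignores C2PA manifests and their cryptographic signatures entirely.

```python
"""Naive check for the IPTC "digital source type" marker in an image's XMP metadata.

Hypothetical sketch: it scans raw bytes instead of parsing the file format,
and it does not read or verify C2PA manifests.
"""
from typing import Optional

# IPTC NewsCodes used to declare media created wholly or partly by generative AI.
IPTC_BASE = "http://cv.iptc.org/newscodes/digitalsourcetype/"
AI_SOURCE_TYPES = {
    IPTC_BASE + "trainedAlgorithmicMedia",
    IPTC_BASE + "compositeWithTrainedAlgorithmicMedia",
}

XMP_OPEN = b"<x:xmpmeta"
XMP_CLOSE = b"</x:xmpmeta>"


def extract_xmp(data: bytes) -> Optional[str]:
    """Return the first embedded XMP packet as text, or None if there isn't one."""
    start = data.find(XMP_OPEN)
    if start == -1:
        return None
    end = data.find(XMP_CLOSE, start)
    if end == -1:
        return None
    # Decode only the XMP slice; the surrounding image bytes are binary.
    return data[start:end + len(XMP_CLOSE)].decode("utf-8", errors="replace")


def looks_ai_generated(data: bytes) -> bool:
    """True if the XMP packet declares an AI-related IPTC digital source type."""
    xmp = extract_xmp(data)
    if xmp is None:
        # Missing metadata means "unknown", not proof that the image is authentic.
        return False
    return any(code in xmp for code in AI_SOURCE_TYPES)
```

The sketch also shows why stripping metadata defeats this kind of check: once the XMP packet is gone, `looks_ai_generated` simply has nothing to find.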

When it comes to GAI video and audio, Clegg notes that companies in the space haven’t started incorporating invisible signals into those formats at the same scale as they have with images. As a result, Meta can’t yet detect video and audio generated by third-party AI tools. In the meantime, Meta expects users to tag such content themselves.

“While the industry is working towards this capability, we’re adding a feature for people to disclose when they share AI-generated video or audio so we can tag it,” Clegg wrote. “We will require people to use this disclosure and labeling tool when they post organic content with digitally created or altered photorealistic video or real-sounding audio, and we may impose penalties if they fail to do so. If we determine that digitally created or altered image, video or audio content poses a particularly high risk of misleading the public on an important issue, we can add more prominent tags as needed so people can get more information and context.”

However, relying on users to add disclosures and tags to AI-generated video and audio seems like a non-starter. Many of the people sharing such content will be deliberately trying to deceive others. Beyond that, others likely won’t care about, or even be aware of, the GAI policies.

In addition, Meta is working to make it harder for people to alter or remove invisible markers from GAI content. The company’s FAIR AI research lab has developed technology that, Clegg writes, “integrates the watermarking mechanism directly into the image generation process for some image generators, which can be valuable for open-source models, so that watermarking cannot be disabled.” Meta is also working on ways to automatically detect AI-generated content that lacks invisible markers.

Meta plans to keep collaborating with industry partners and “remain in dialogue with governments and civil society” as GAI becomes more widespread. Clegg believes this is the right approach for content shared on Facebook, Instagram and Threads for the time being, though Meta will adjust things if necessary.

One of the main problems with Meta’s approach, at least while it works on ways to automatically detect GAI content that doesn’t use industry-standard invisible markers, is that it requires buy-in from partners. For instance, C2PA has a ledger-style method of authentication. For that to work, both the tools used to create images and the platforms that host them need to buy into C2PA.

Meta shared the update on tagging AI-generated content just days after CEO Mark Zuckerberg shed more light on his company’s plans to build artificial general intelligence. He noted that training data is one of Meta’s main advantages. Zuckerberg claimed that the photos and videos shared on Facebook and Instagram amount to a larger dataset than Common Crawl, a dataset of some 250 billion web pages that has been used to train other AI models. Meta will be able to draw on both, and it doesn’t have to share the data it has collected through Facebook and Instagram with anyone else.

The promise to label AI-generated content more broadly comes a day after Meta’s Oversight Board determined that a video misleadingly edited to suggest that President Joe Biden repeatedly touched his granddaughter’s chest could remain on the company’s platforms. In reality, Biden placed an “I voted” sticker on her shirt after she voted in person for the first time. The board determined that the video was permissible under Meta’s manipulated media rules, but urged the company to update those community guidelines.