Google just announced some nice improvements to its Gemini AI chatbot for Android devices at the company's I/O 2024 event. The AI is now part of the Android operating system itself, which allows it to be integrated more comprehensively.

The coolest new feature wouldn't be possible without integration with the underlying OS. Gemini now better understands the context of the app you're using on your smartphone. What exactly does this mean? Once the tool officially launches as part of Android 15, you'll be able to bring up a Gemini overlay that sits on top of the current app. This allows for context-sensitive actions and queries.

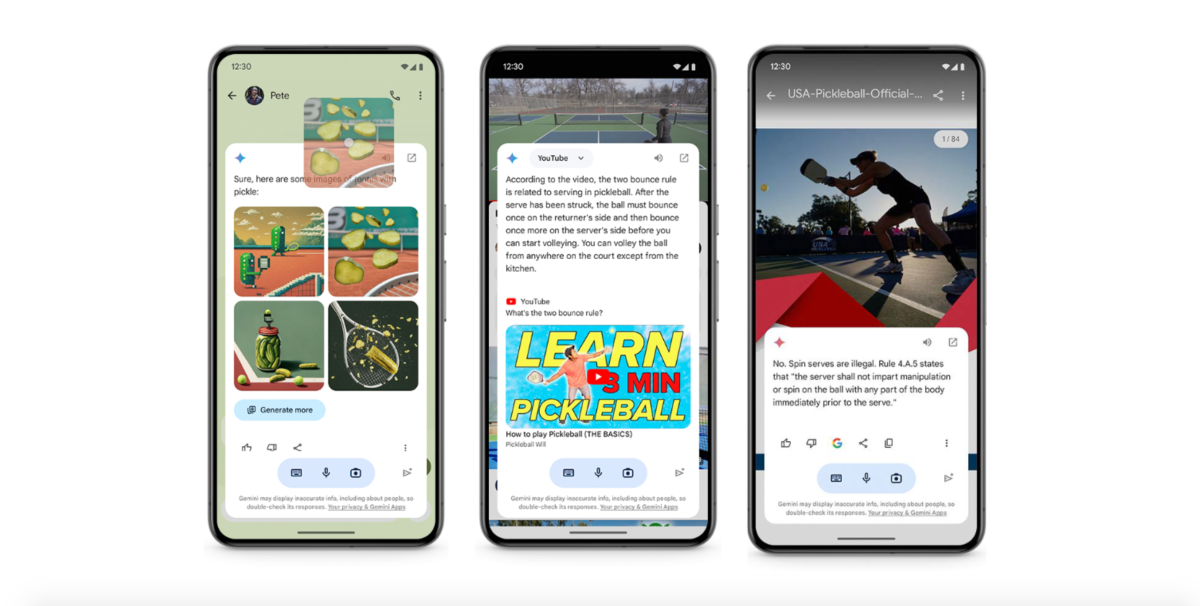

Google gave an example of quickly dropping generated images into Gmail and Google Messages, though you may want to stay away from historical images for now. The company also introduced a feature called "Ask This Video" that lets users ask the chatbot questions about a specific YouTube video. Google says it should work with "billions" of videos. A similar tool is coming for PDFs.

It's easy to see where this technology is headed. Once Gemini has access to most of your app library, it should be able to truly deliver on some of the lofty promises made by competing AI companies like Humane and Rabbit. Google says it's "starting with how on-device AI can change what your phone can do," so we imagine future integration with apps like Uber and DoorDash, at the very least.

Circle to Search also gets a boost from on-device AI. Users will be able to circle almost anything on their phones and get relevant information, without switching apps. This even works with math and physics problems: just circle the problem to get the answer, which will delight students and frustrate teachers.
