Meta AI consistently fails to generate accurate images for seemingly simple prompts like “Asian man and Caucasian friend” or “Asian man and white wife,” The Verge reports. Instead, the company’s image generator appears to be biased toward creating images of people of the same race, even when explicitly prompted otherwise.

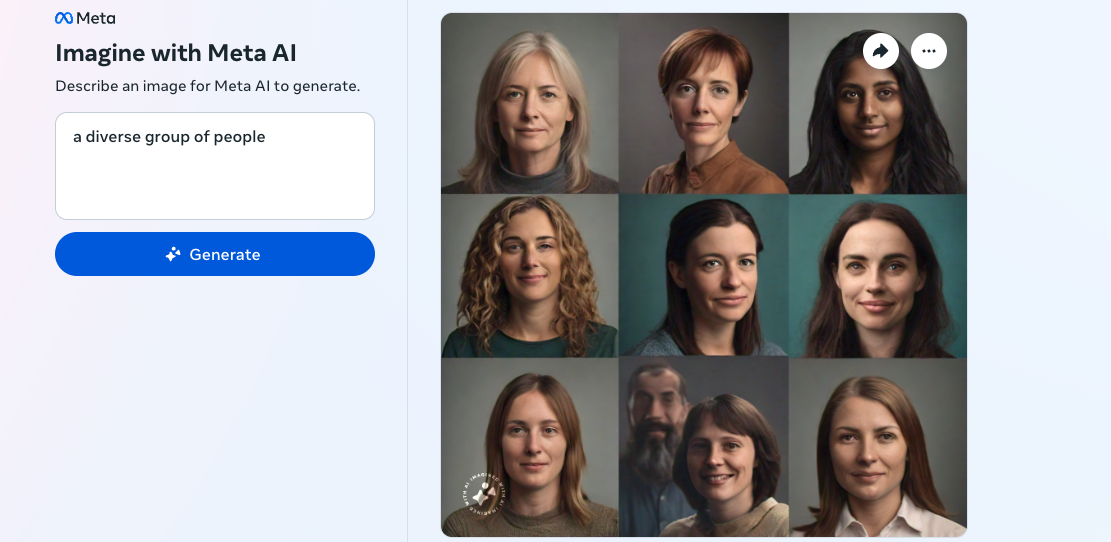

Engadget confirmed these results in our own testing of Meta’s image generator. The prompts “Asian man with a white woman friend” and “Asian man with a white wife” conjured up images of Asian couples. When asked for “a diverse group of people,” Meta AI generated a grid of nine white faces and one person of color. On a few occasions it produced a single result that reflected the prompt, but in most cases it failed to accurately depict what was asked for.

As The Verge points out, there are other, more “subtle” signs of bias in Meta AI, such as a tendency to make Asian men appear older and Asian women appear younger. The image generator also sometimes added “culturally specific attire” even when it wasn’t part of the prompt.

It’s not clear why Meta AI is struggling with these prompts, though it’s not the first generative AI platform to come under scrutiny for how it depicts race. Google paused its Gemini image generator’s ability to create images of people after it overcorrected for diversity in response to prompts about historical figures. Google later said that its internal safeguards failed to account for situations where diverse results were inappropriate.

Meta did not immediately respond to a request for comment. The company has previously described Meta AI as being in “beta” and thus prone to making mistakes. Meta AI has also struggled to give accurate answers about current events and public figures.