It’s been five months since President Joe Biden signed an executive order (EO) to address the rapid advances in artificial intelligence. The White House is today taking another step forward in implementing the EO with a policy aimed at regulating the federal government’s use of AI. Safeguards that agencies must put in place include, among other things, ways to reduce the risk of algorithmic bias.

“I believe that all leaders from government, civil society, and the private sector have a moral, ethical, and social responsibility to make sure that AI is adopted and developed in a way that protects the public from potential harm [while ensuring everyone can enjoy] its benefits,” Vice President Kamala Harris told reporters during a press conference.

Harris announced three binding requirements under the new Office of Management and Budget (OMB) policy. First, agencies must ensure that the AI tools they use “do not endanger the rights and safety of the American people.” They have until Dec. 1 to put in place “concrete safeguards” to make sure the AI systems they use don’t negatively affect the safety or rights of Americans. Otherwise, an agency will have to stop using the AI product unless its leaders can justify that removing the system would have an “unacceptable” impact on critical operations.

Impact on the rights and safety of Americans

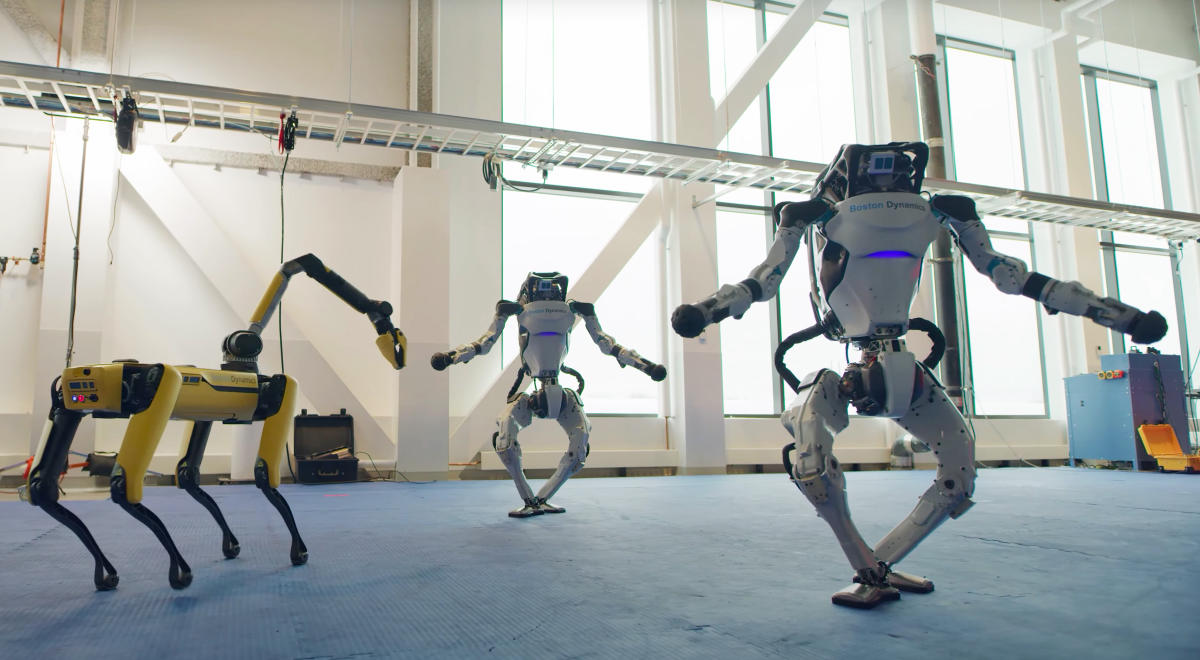

According to the policy, an artificial intelligence system is considered to have an impact on safety if it is “used or expected to be used in a real-world setting” to control or significantly influence the outcome of certain actions and decisions. These include protecting election integrity and voting infrastructure; controlling critical safety functions of infrastructure such as water systems, emergency services and power grids; operating autonomous vehicles; and controlling the physical movements of robots “in the workplace, school, housing, transportation, medical or law enforcement” settings.

Agencies will also have to abandon AI systems that violate Americans’ rights unless they have adequate safeguards or can otherwise justify their use. The uses that the policy presumes to affect rights include predictive policing; social media monitoring for law enforcement agencies; plagiarism detection in schools; blocking or restricting protected speech; detecting or measuring human emotions and thoughts; pre-employment screening; and “reproducing a person’s likeness or voice without express consent.”

When it comes to generative AI, the policy calls for agencies to evaluate its potential benefits. They must also “establish adequate safeguards and controls that allow generative AI to be used without posing undue risk to the agency.”

Transparency requirements

The second requirement would force agencies to be transparent about the AI systems they use. “Today, President Biden and I require that every year U.S. government agencies publish online a list of their AI systems, an assessment of the risks those systems may pose, and how those risks are managed,” Harris said.

As part of that effort, agencies will have to publish government-owned AI code, models and data. If an agency can’t disclose specific AI uses for sensitivity reasons, it will still have to report metrics.

Finally, federal agencies must have internal oversight of their use of artificial intelligence. This includes each department appointing a chief artificial intelligence officer to oversee its use of AI. “This is to make sure that AI is used responsibly, that we need to have senior leaders in our government who are specifically tasked with overseeing the adoption and use of AI,” Harris said. By May 27, many agencies must also have AI governance boards in place.

Prominent figures from the public and private sectors, including civil rights leaders, computer scientists, business leaders and legal scholars, helped shape the policy, the vice president added.

OMB suggests that, under the new safeguards, the Transportation Security Administration may have to allow airline travelers to opt out of facial recognition scans without losing their place in line or experiencing delays. It also suggests human oversight of things like AI fraud detection and diagnostic decisions in the federal health care system.

As you can imagine, government agencies are already using AI systems in a variety of ways. The National Oceanic and Atmospheric Administration is working on artificial intelligence models to help more accurately predict extreme weather, flooding and wildfires, while the Federal Aviation Administration is using a system to help manage air traffic in major metropolitan areas to improve travel times.

OMB Director Shalanda Young told reporters that “AI is not only a risk, but also a huge opportunity to improve public services and make progress on societal challenges such as addressing climate change, improving public health, and developing equitable economic opportunity.” She added: “When used and monitored responsibly, AI can help agencies reduce wait times for critical government services to improve accuracy and expand access to essential government services.”

The policy is the latest in a series of efforts to regulate the fast-growing field of artificial intelligence. While the European Union has adopted a broad set of rules for the use of AI in the bloc, and there are federal bills in the pipeline, efforts to regulate AI in the US have taken more of a patchwork approach at the state level. This month, Utah passed a law to protect consumers from AI fraud. Tennessee’s Ensuring Likeness Voice and Image Security Act (aka the ELVIS Act – seriously) is an attempt to protect musicians from deepfakes, i.e. having their voices cloned without permission.