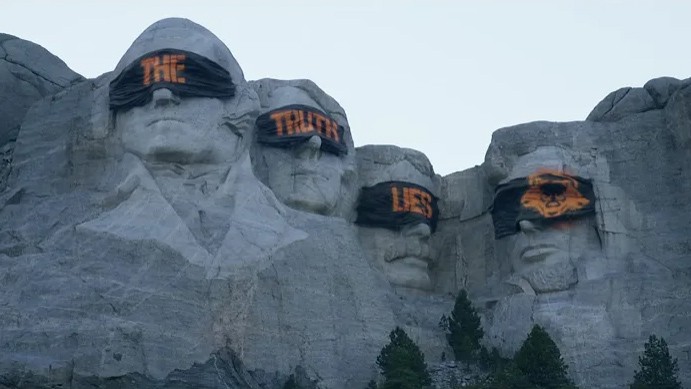

In an age where fraudsters are using generative artificial intelligence to scam people out of money or tarnish someone's reputation, tech firms are offering ways to help users verify content, at least for still images. As teased in its 2024 misinformation strategy, OpenAI now includes provenance metadata in images generated with ChatGPT on the web and the DALL-E 3 API, with their mobile counterparts receiving the same upgrade by February 12th.

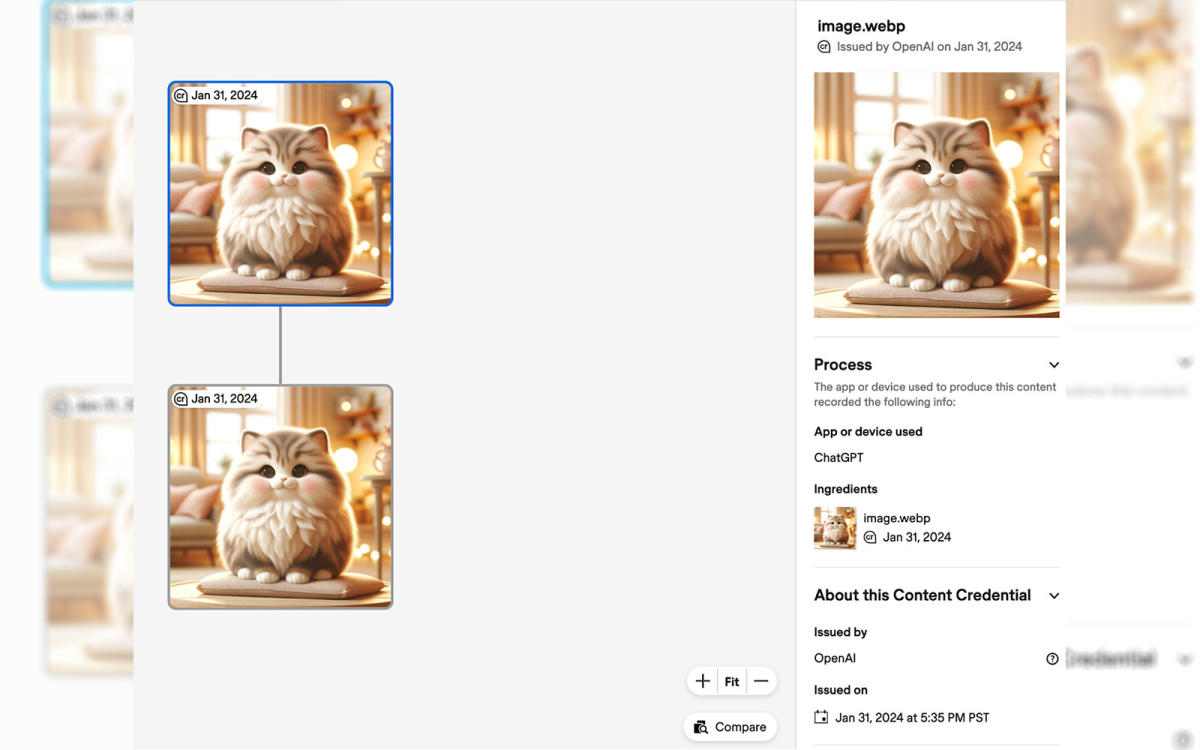

The metadata conforms to the C2PA (Coalition for Content Provenance and Authenticity) open standard, and when such an image is uploaded to the Content Credentials Verify tool, you will be able to trace its provenance lineage. For example, an image generated using ChatGPT will show an initial metadata manifest indicating its DALL-E 3 API origin, followed by a second metadata manifest indicating that it surfaced in ChatGPT.

Despite the sophisticated cryptographic technology behind the C2PA standard, this verification method only works if the metadata is intact. The tool is useless if you upload an AI-generated image without metadata, as is the case with any screenshot or any image uploaded to social media. Not surprisingly, the current sample images on the official DALL-E 3 page also returned blank. On its FAQ page, OpenAI admits this is not a silver bullet for the misinformation war, but it believes the key is to encourage users to actively look for such signals.
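To illustrate why intact metadata matters, here is a minimal Python sketch that checks whether a PNG file still carries a chunk that could hold a C2PA manifest. It assumes the image is a PNG and that the manifest store sits in a `caBX` chunk, the chunk type the C2PA specification defines for PNG embedding. The `make_chunk` helper and the two demo byte strings are hypothetical, built only for this example. The sketch detects presence only; it does not validate any signature and is not how the Verify tool works internally.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
# Assumption: C2PA manifests in PNG files live in a chunk of this type.
C2PA_PNG_CHUNK = b"caBX"


def png_chunk_types(data: bytes) -> list:
    """Return the chunk types of a PNG byte string, in file order."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    types = []
    offset = len(PNG_SIGNATURE)
    # Each chunk is: 4-byte big-endian length, 4-byte type, data, 4-byte CRC.
    while offset + 8 <= len(data):
        (length,) = struct.unpack(">I", data[offset:offset + 4])
        types.append(data[offset + 4:offset + 8])
        offset += 12 + length
    return types


def has_c2pa_chunk(data: bytes) -> bool:
    """True if the PNG carries a chunk that could hold a C2PA manifest store."""
    return C2PA_PNG_CHUNK in png_chunk_types(data)


def make_chunk(chunk_type: bytes, payload: bytes = b"") -> bytes:
    """Build one PNG chunk (hypothetical helper, used only for the demo)."""
    crc = zlib.crc32(chunk_type + payload) & 0xFFFFFFFF
    return (struct.pack(">I", len(payload)) + chunk_type + payload
            + struct.pack(">I", crc))


# Demo data: a skeletal PNG with a placeholder manifest chunk, and the same
# file after the chunk has been dropped (as a screenshot or re-encode would do).
header = make_chunk(b"IHDR", b"\x00" * 13)
with_manifest = PNG_SIGNATURE + header + make_chunk(b"caBX", b"demo") + make_chunk(b"IEND")
stripped = PNG_SIGNATURE + header + make_chunk(b"IEND")

print(has_c2pa_chunk(with_manifest))  # True
print(has_c2pa_chunk(stripped))       # False
```

Once the chunk is gone, nothing in the remaining pixels points back to the generator, which is why a re-saved or screenshotted image comes back blank.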

While OpenAI’s latest efforts to thwart fake content are currently limited to still images, Google’s DeepMind already has SynthID for digitally watermarking both images and audio generated by artificial intelligence. Meanwhile, Meta has been testing invisible watermarking through its own AI image generator, which may be less prone to tampering.