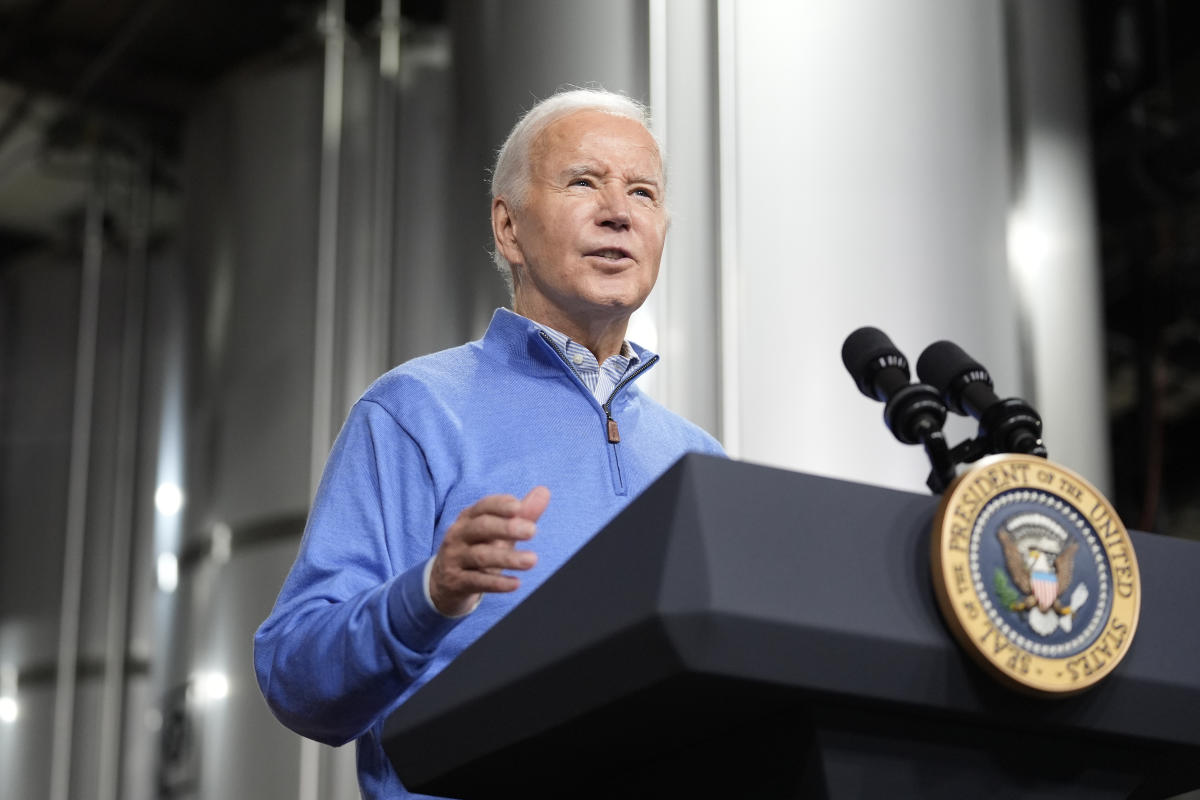

ElevenLabs, an artificial intelligence startup that offers voice cloning services, has banned the user who created an audio deepfake of Joe Biden used in an attempt to disrupt the elections, according to Bloomberg. The audio impersonating the president was used in a robocall last week that told some New Hampshire voters not to vote in their state’s primary election. It was initially unclear what technology was used to copy Biden’s voice, but a thorough analysis by security company Pindrop showed that the perpetrators used ElevenLabs’ tools.

The security firm removed background noise and cleaned up the robocall’s audio before comparing it to samples from more than 120 voice synthesis technologies used to create deepfakes. Pindrop CEO Vijay Balasubramaniyan told Wired that the analysis came back well north of 99 percent that the audio was made with ElevenLabs. Bloomberg says the company has been notified of Pindrop’s findings and is still investigating, but it has already identified and suspended the account that created the fake audio. ElevenLabs told the news organization that it could not comment on the matter itself, but that it is “dedicated to preventing abuse of audio AI tools and [that it takes] any abuse extremely seriously.”

The deepfaked Biden robocall shows how technologies that can mimic someone else’s likeness and voice could be used to manipulate votes in the upcoming US presidential election. “This is just the tip of the iceberg of what can be done with respect to voter suppression or attacks on election workers,” Kathleen Carley, a professor at Carnegie Mellon University, told The Hill. “It was pretty much a harbinger of all sorts of things to expect over the next few months.”

It took the internet only a few days after ElevenLabs launched a beta version of its platform to start using it to create audio clips that sounded like celebrities reading or saying something questionable. The startup lets customers use its technology to clone voices for “artistic and political speech that contributes to public debate.” Its safety page warns users that they “may not clone audio for abusive purposes such as fraud, discrimination, hate speech or any form of online abuse without breaking the law.” But it is clear that more safeguards must be in place to prevent bad actors from using its tools to influence voters and manipulate elections around the world.
