Liz Reid, the head of Google Search, has admitted that the company’s search engine returned some “odd, inaccurate or unhelpful AI Overviews” after the feature rolled out to everyone in the United States. The executive published an explanation for Google’s more peculiar AI-generated responses in a blog post, where she also announced that the company has implemented safeguards to help the new feature return more accurate and less meme-worthy results.

Reid defended Google and pointed out that some of the more egregious AI Overview responses circulating online, such as claims that it’s safe to leave dogs in cars, are fake. The viral screenshot showing the answer to “How many rocks should I eat?” is real, but she said Google came up with that answer because a website had published satirical content on the topic. “Prior to these screenshots going viral, practically no one asked Google that question,” she explained, so the company’s AI linked to that website.

The Google VP also confirmed that AI Overviews recommended that people use glue to get cheese to stick to pizza, based on content taken from a forum. Forums typically provide “authentic, first-hand information,” she said, but they can also lead to “less-than-helpful advice.” The executive didn’t mention the other viral AI Overview answers, but according to The Washington Post, the technology also told users that Barack Obama is a Muslim and that people should drink a lot of urine to help pass kidney stones.

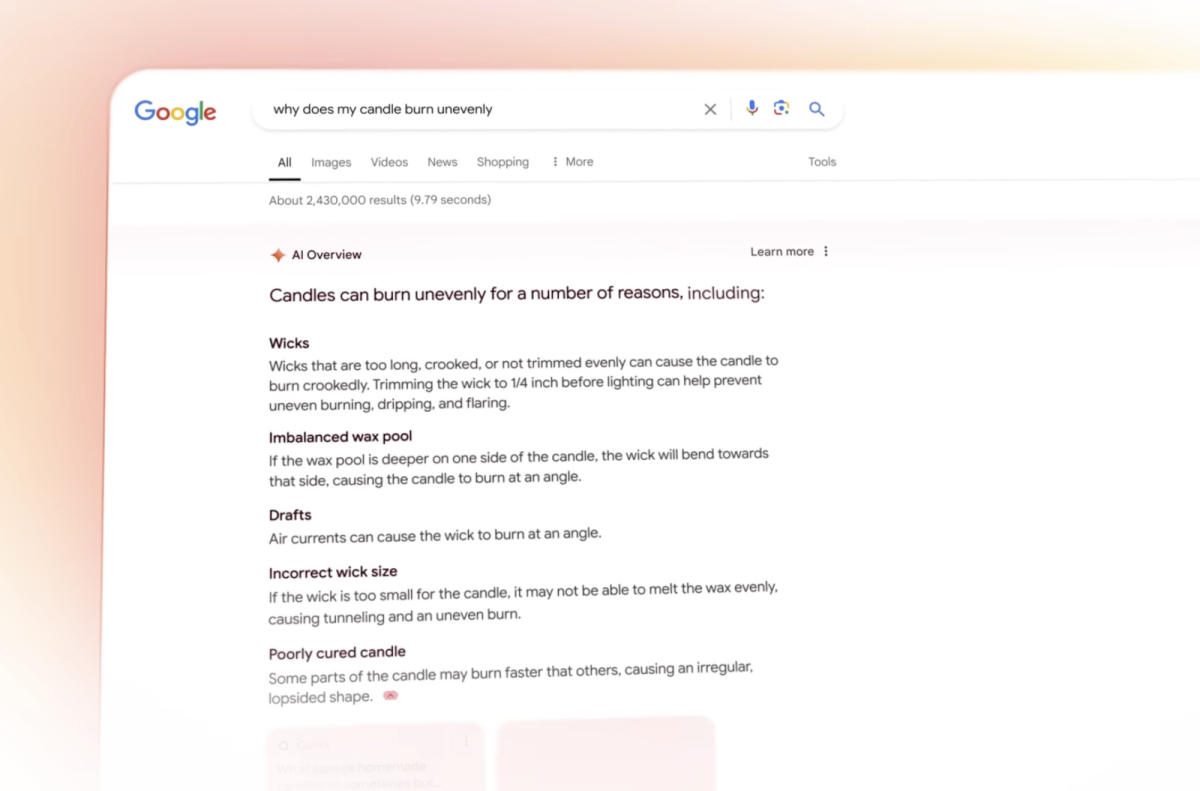

Reid said the company tested the feature extensively before launch, but “there’s nothing quite like having millions of people using the feature with many novel searches.” Google was apparently able to identify patterns in which its AI technology didn’t get things right by examining its responses over the past couple of weeks. It then put protections in place based on those observations, starting by tuning its AI to better detect humor and satirical content. It has also updated its systems to limit the inclusion of user-generated content, such as social media and forum posts, in AI Overviews, since such content could give people misleading or even harmful advice. In addition, it has “added triggering restrictions for queries where AI Overviews were not proving to be as helpful” and has stopped showing AI-generated answers for certain health topics.